We present AlphaOne (![]() ), a universal framework for modulating reasoning progress in large reasoning models (LRMs) at test time.

), a universal framework for modulating reasoning progress in large reasoning models (LRMs) at test time.

![]() first introduces

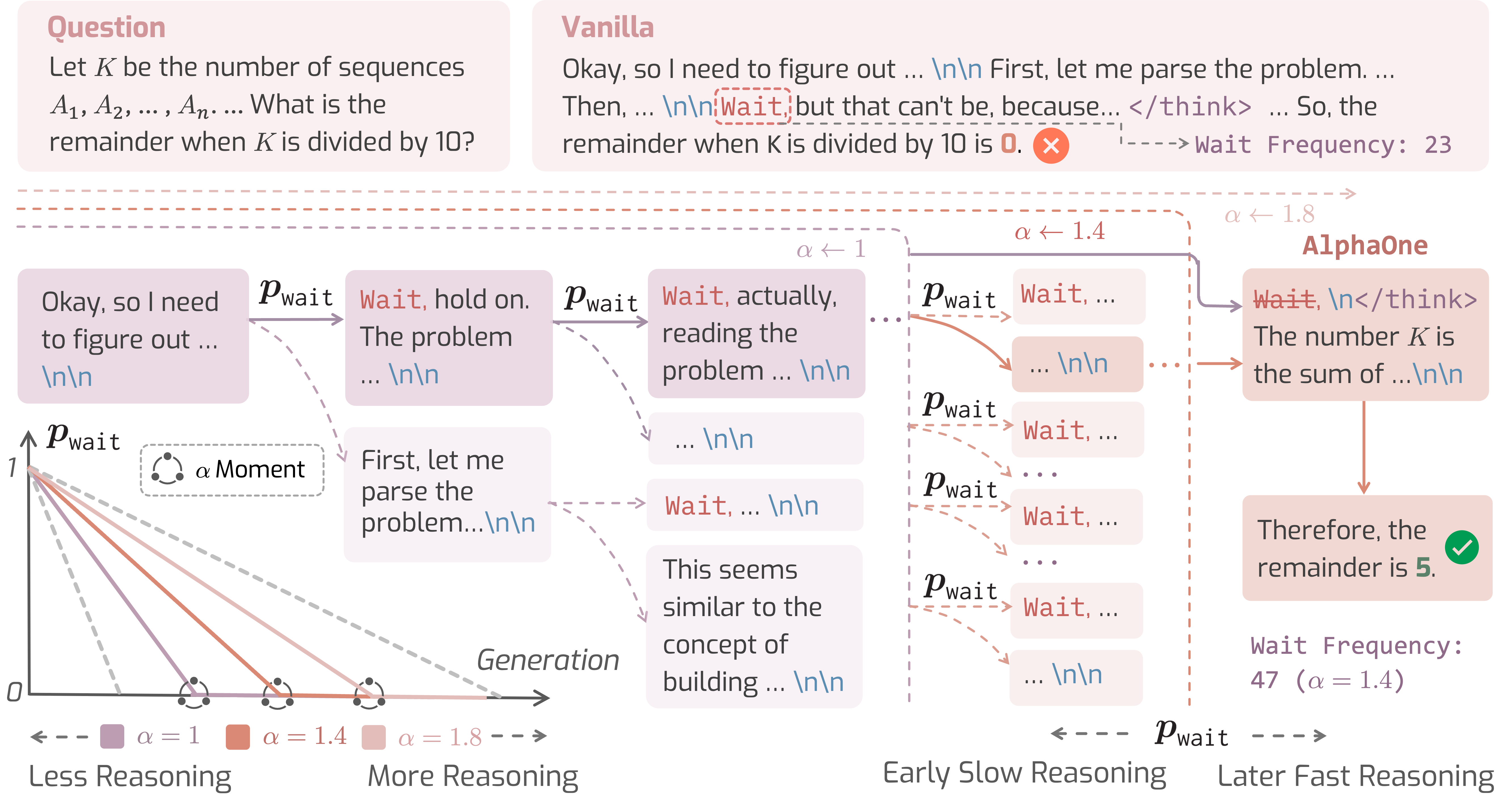

first introduces ![]() moment, which represents the scaled thinking phase with a universal parameter

moment, which represents the scaled thinking phase with a universal parameter ![]() . Within this scaled pre-

. Within this scaled pre-![]() moment phase, it dynamically schedules slow thinking transitions by modeling the insertion of reasoning transition tokens as a Bernoulli stochastic process.

After the

moment phase, it dynamically schedules slow thinking transitions by modeling the insertion of reasoning transition tokens as a Bernoulli stochastic process.

After the ![]() moment,

moment, ![]() deterministically terminates slow thinking with the end-of-thinking token, thereby fostering fast reasoning and efficient answer generation.

deterministically terminates slow thinking with the end-of-thinking token, thereby fostering fast reasoning and efficient answer generation.
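The pre-α moment scheduling described above can be illustrated with a minimal sketch. It assumes a linearly annealed insertion probability and a per-step Bernoulli draw; the names `wait_probability`, `schedule_insertions`, and `p_start`, as well as the starting value 0.4, are hypothetical and chosen only for illustration, not taken from the authors' implementation.

```python
import random


def wait_probability(t, alpha_moment, p_start=0.4):
    """Probability of inserting a slow-thinking transition token at step t.

    Assumption: the probability anneals linearly from p_start to 0
    as t approaches the alpha moment.
    """
    if t >= alpha_moment:
        return 0.0  # no stochastic insertion once the alpha moment is reached
    return p_start * (1.0 - t / alpha_moment)


def schedule_insertions(alpha_moment, total_steps, seed=0):
    """Return the steps at which a Bernoulli draw inserts a transition token."""
    rng = random.Random(seed)
    return [
        t
        for t in range(total_steps)
        if rng.random() < wait_probability(t, alpha_moment)
    ]


if __name__ == "__main__":
    steps = schedule_insertions(alpha_moment=100, total_steps=200)
    print(f"{len(steps)} insertions, all before step 100: {all(t < 100 for t in steps)}")
```

In this sketch every generation step is a candidate insertion point; a real decoder would likely restrict the draw to specific positions in the generated text.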

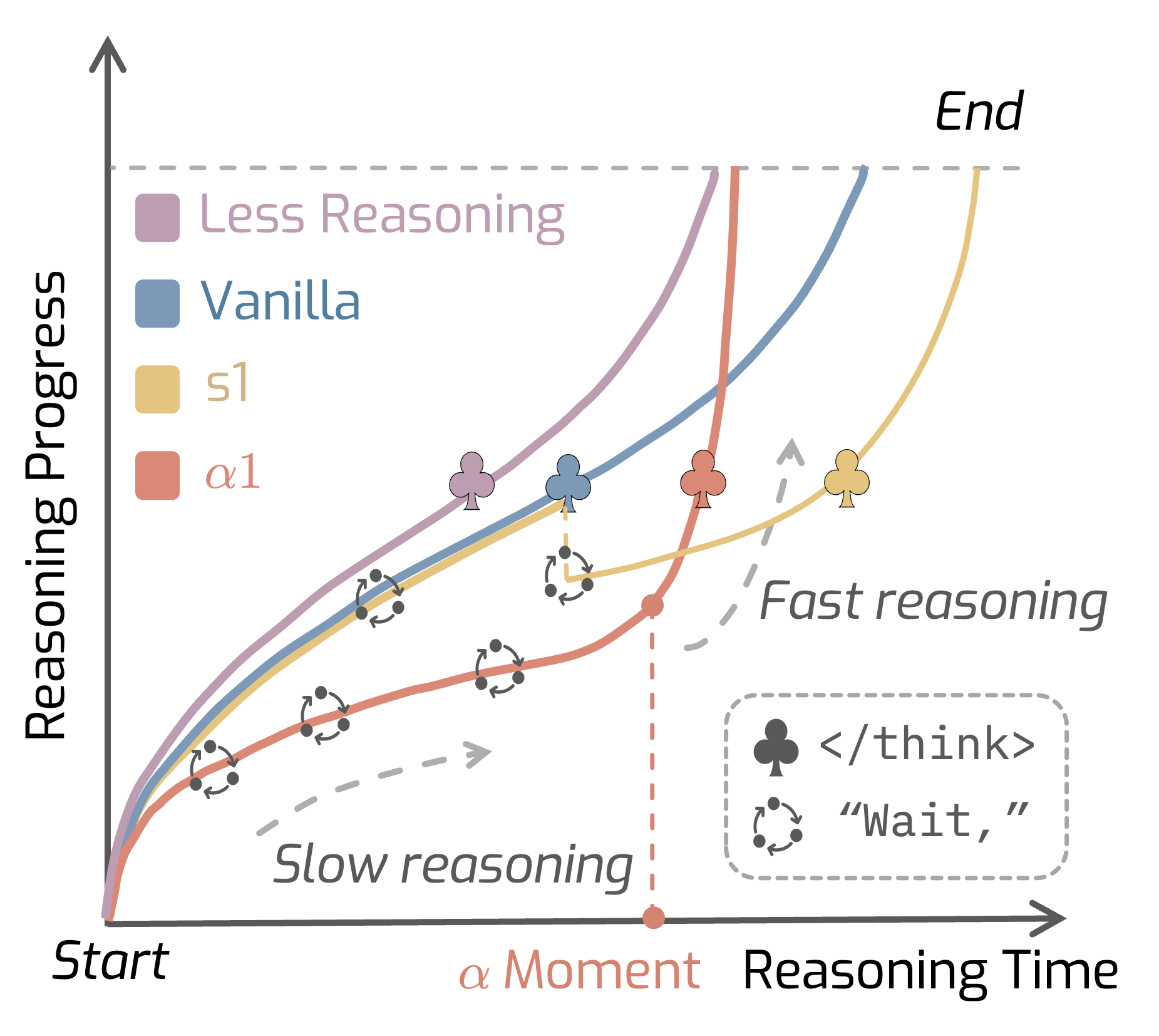

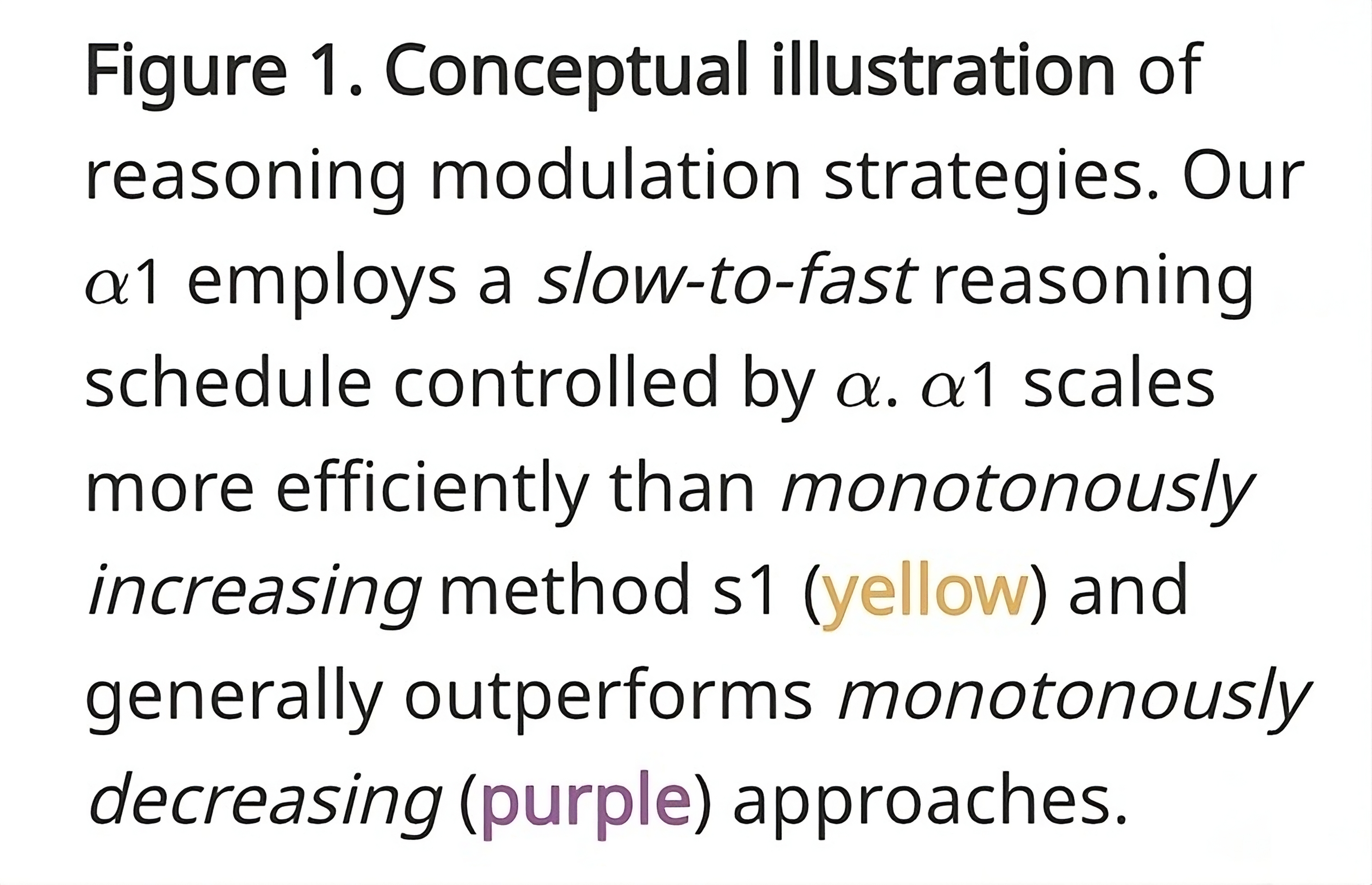

This approach unifies and generalizes existing monotonic scaling methods by enabling flexible and dense slow-to-fast reasoning modulation, while offering critical insights into the joint optimization of reasoning capabilities and computational efficiency.

Here, the marker in the figure represents the α moment.

α1 applies dense reasoning modulation via a user-defined slow thinking schedule in the pre-α moment phase. In addition, α1 applies post-α moment modulation by replacing the slow thinking transition token "wait" with "</think>", which fosters fast thinking.

Specifically, α determines when the slow-to-fast reasoning transition occurs. For example, reducing α from 1.4 to 1.0 shifts the α moment earlier, resulting in a shorter slow reasoning phase and a faster annealing of the probability of inserting "wait".
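To make the role of α concrete, the following sketch computes where the α moment falls and applies the post-α moment replacement. It assumes the α moment is placed at α times an average thinking length in tokens; that placement, the value 1000, and the function names `alpha_moment` and `modulate_post_alpha` are illustrative assumptions rather than details stated here.

```python
def alpha_moment(alpha, avg_thinking_len):
    """Token position of the alpha moment.

    Assumption: the moment sits at alpha times an average thinking length.
    """
    return round(alpha * avg_thinking_len)


def modulate_post_alpha(token, t, moment):
    """After the alpha moment, replace the slow-thinking token "wait" with "</think>"."""
    if t >= moment and token == "wait":
        return "</think>"
    return token


if __name__ == "__main__":
    avg_len = 1000  # hypothetical average thinking length in tokens
    for alpha in (1.4, 1.0):
        moment = alpha_moment(alpha, avg_len)
        # The same "wait" emitted at step 1200 is kept or replaced depending on alpha.
        print(alpha, moment, modulate_post_alpha("wait", 1200, moment))
```

With these assumed numbers, a "wait" emitted at step 1200 survives when α = 1.4 (the moment is at step 1400) but is replaced by "</think>" when α = 1.0 (the moment is at step 1000), which is the earlier slow-to-fast transition described above.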

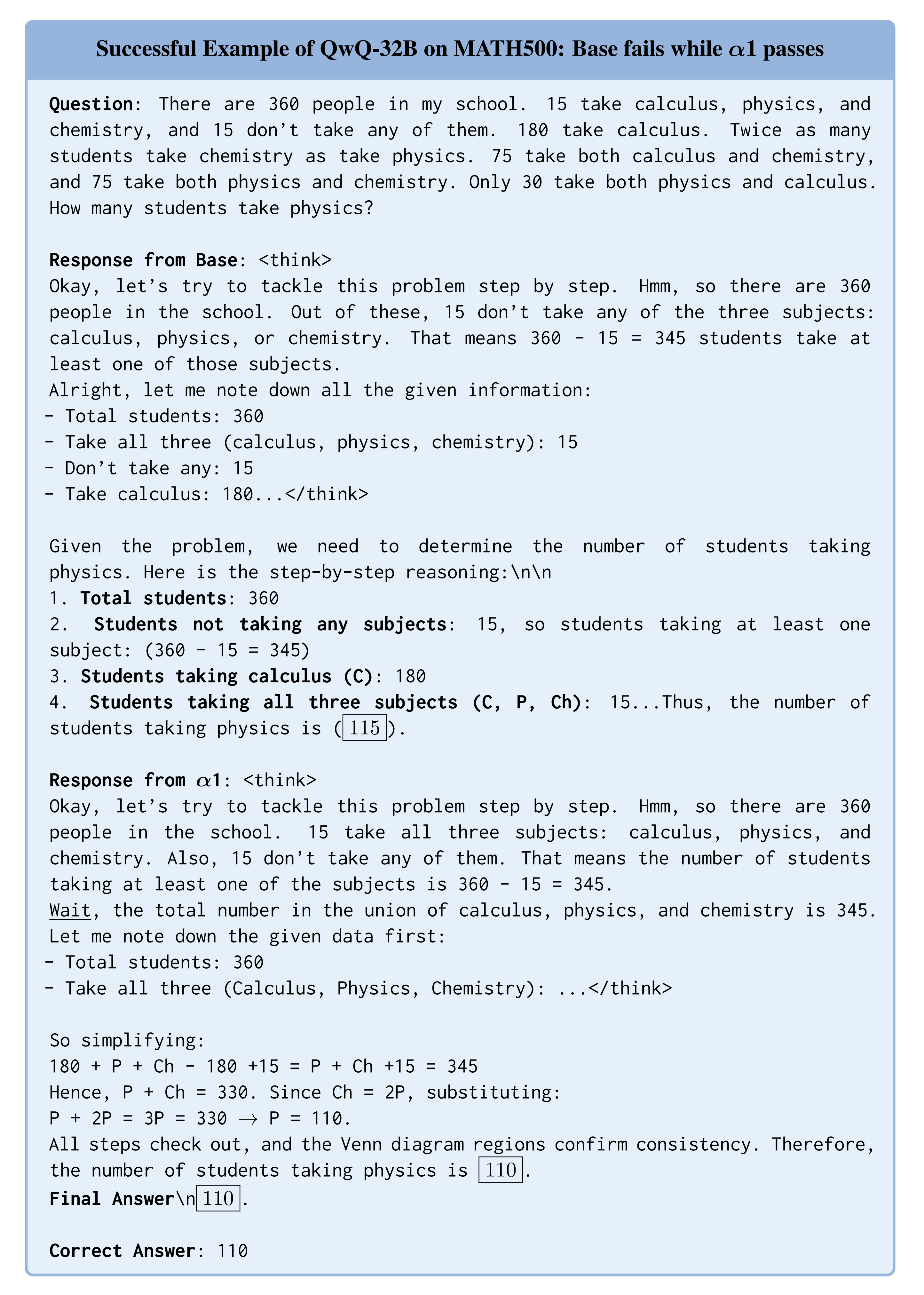

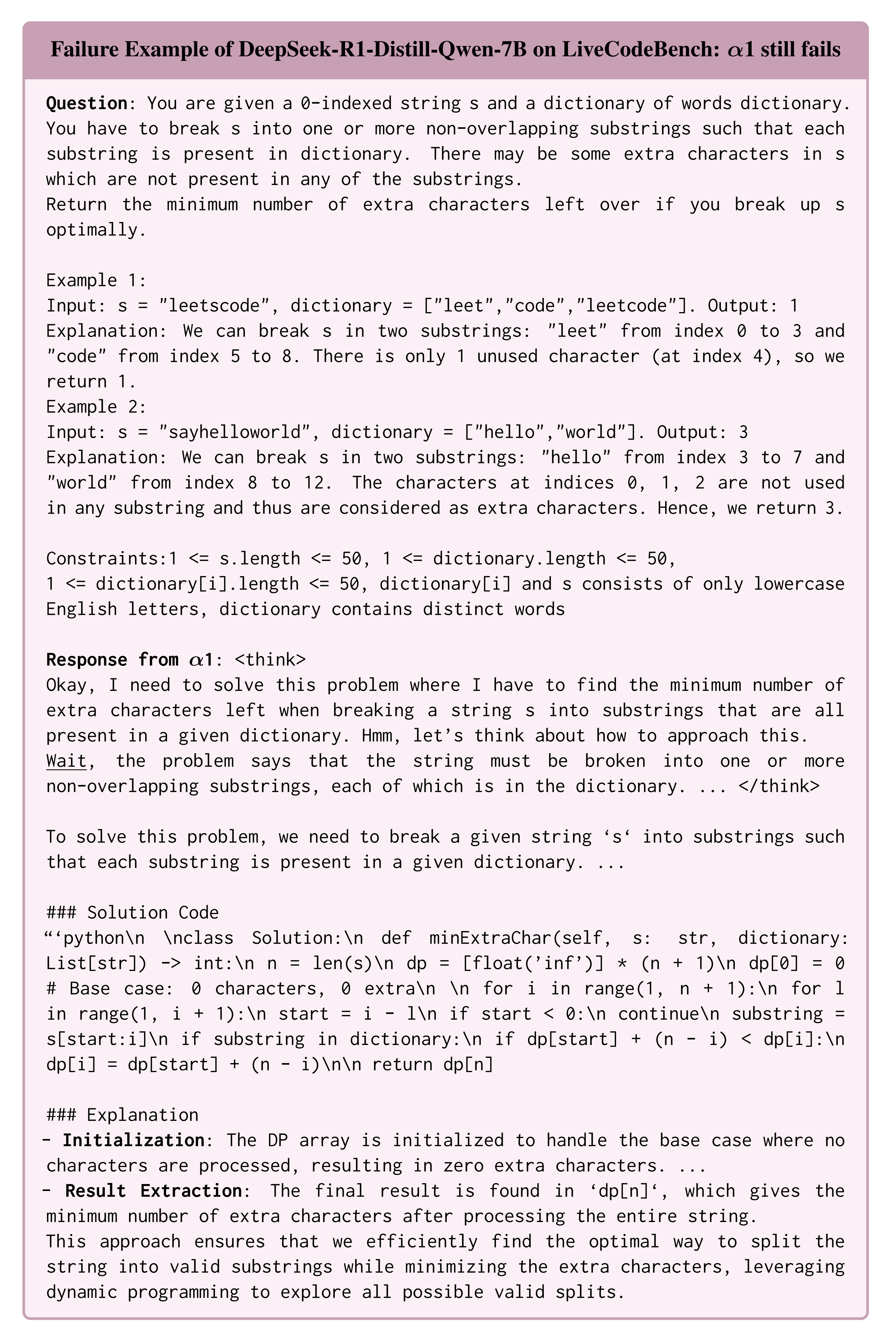

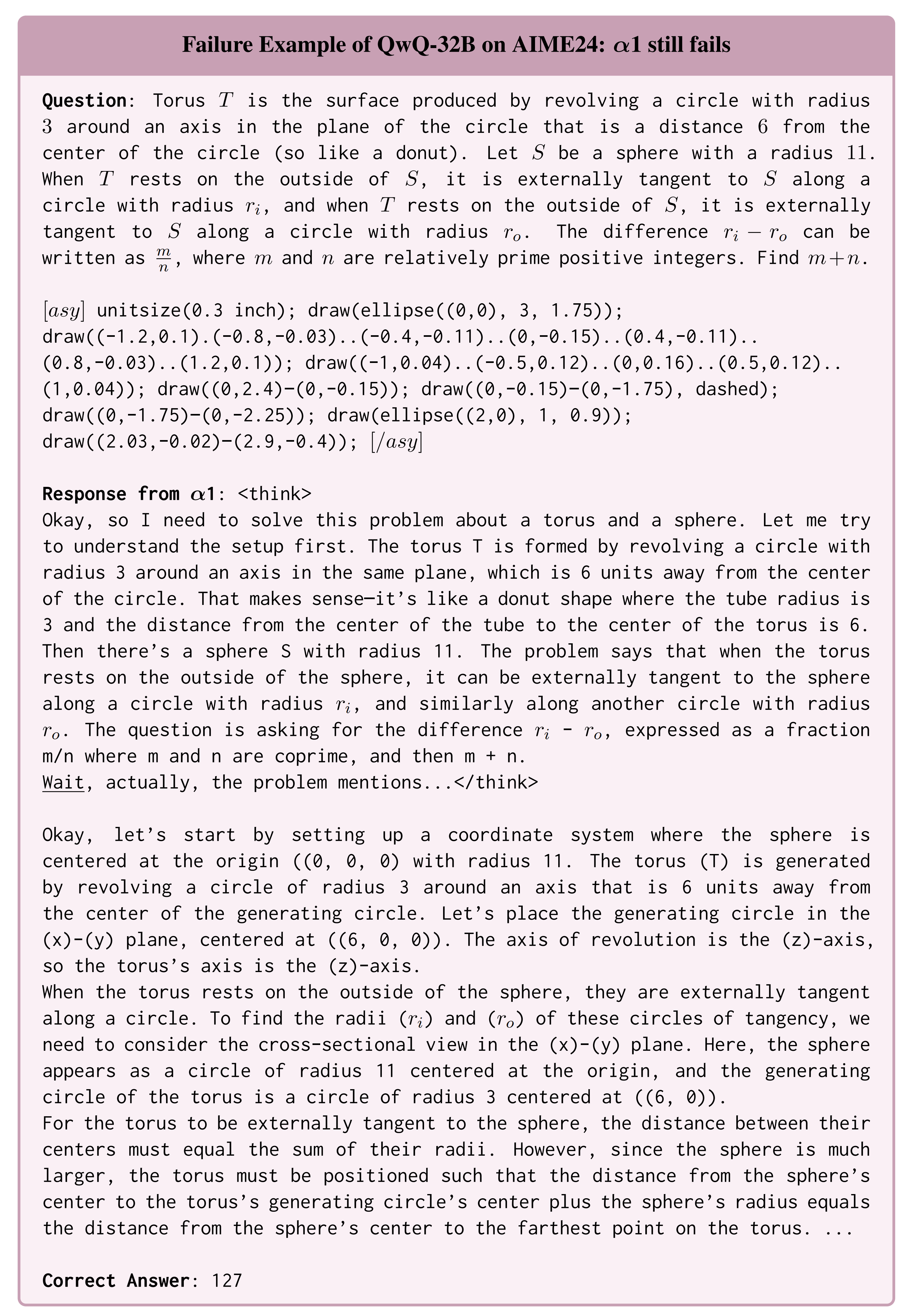

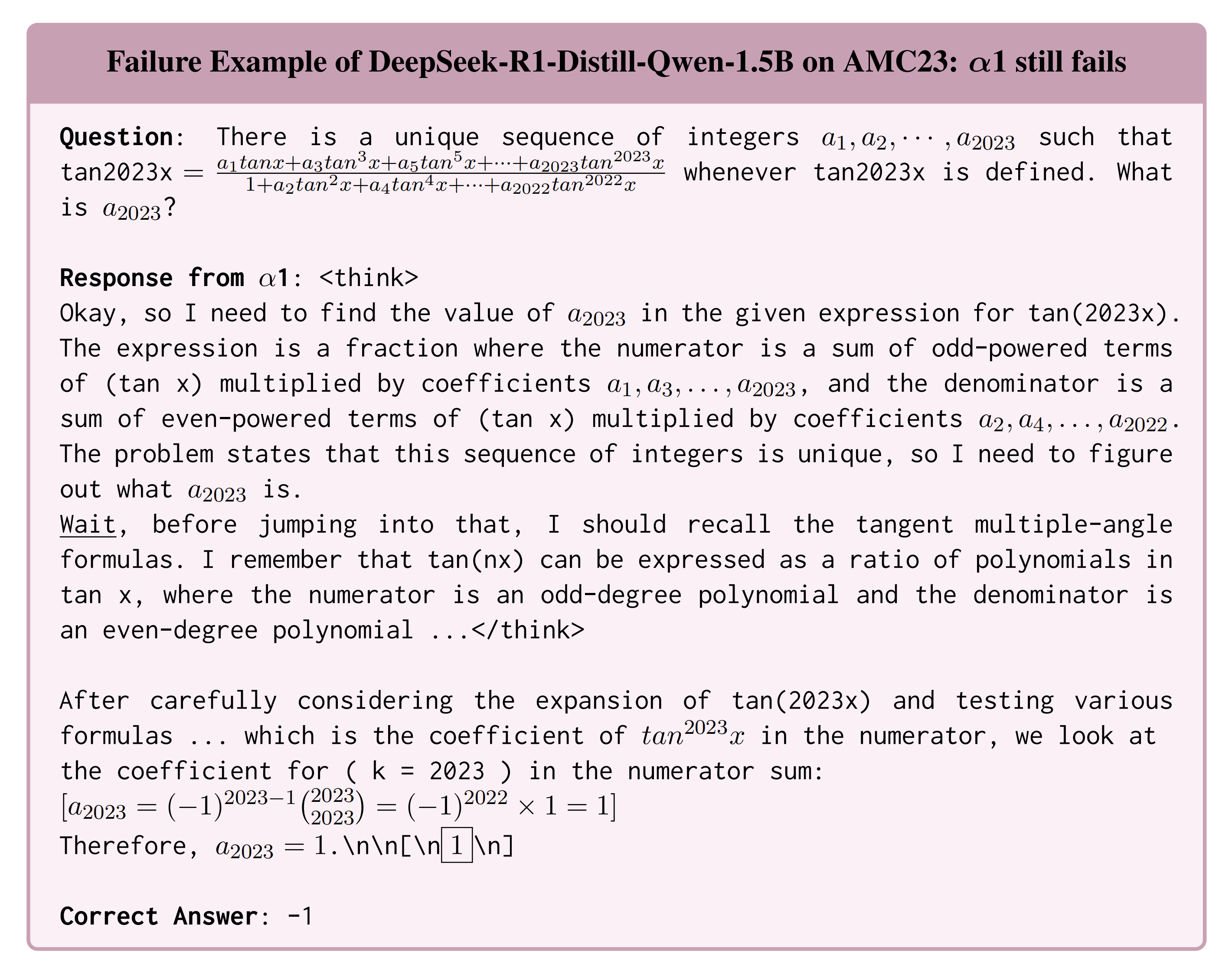

We present key findings from evaluating α1 on three different LRMs, ranging from 1.5B to 32B parameters, across six reasoning benchmarks covering math, code generation, and scientific problem reasoning.

💡 Slow thinking first, then fast thinking, leads to better LRM reasoning.

💡 Slow thinking can bring efficient test-time scaling.

💡 Slow thinking transitioning in high frequency is helpful.


@article{AlphaOne25,
  title={AlphaOne: Reasoning Models Thinking Slow and Fast at Test Time},
  author={Zhang, Junyu and Dong, Runpei and Wang, Han and Ning, Xuying and Geng, Haoran and Li, Peihao and He, Xialin and Bai, Yutong and Malik, Jitendra and Gupta, Saurabh and Zhang, Huan},
  journal={arXiv preprint arXiv:2505.24863},
  year={2025}
}